You’re seamlessly browsing on your phone, tapping through a sleek app with intuitive gestures and crystal-clear navigation. Then you switch to your laptop, open the same service on the web—and suddenly everything feels off. Buttons are in different places, flows are clunky, and features you loved on mobile are buried or missing entirely. It’s jarring, frustrating, and all too common in today’s fragmented digital world.

Now amplify that frustration across an entire enterprise ecosystem. Generative AI tools are flooding digital spaces with an explosion of content—text, images, videos, and interactive elements created at lightning speed. This mirrors the recent surge in user-generated assets powered by AI marketing innovations, where brands are churning out personalized visuals and copy en masse. The result? Interfaces that were once carefully crafted are now overwhelmed by inconsistent, rapidly generated elements that don’t always play nice together.

What Is User Experience (UX) Design?

At its core, user experience design is the disciplined process of enhancing user satisfaction by improving the usability, accessibility, and overall pleasure provided in the interaction with a product or service. It’s not just about making things look pretty—it’s about ensuring every touchpoint feels intuitive, efficient, and delightful, whether on a mobile app, website, or enterprise dashboard. Great UX anticipates user needs, reduces friction, and builds loyalty; poor UX leads to abandonment and lost opportunities.

Shining a Light on the Awareness Stage of the Buyer’s Journey

In the buyer’s journey, the awareness stage is all about recognition—identifying that a problem exists before rushing to fixes. This article zeroes in on that exact moment for UX design challenges. We’re not diving into solutions here (that’s for another day). Instead, we’ll highlight common pain points that many teams and projects encounter, particularly as generative AI accelerates complexities across digital products.

With AI-driven tools automating content creation and even elements of design itself—from generative images to dynamic layouts—the pace of change is exposing long-standing vulnerabilities. Enterprises are grappling with inconsistencies at scale, where AI-amplified assets introduce variability that traditional UX processes struggle to contain. Emerging trends, such as automated compliance tools for managing digital assets, underscore how rapid technological advancements are laying bare these gaps, making it harder to maintain cohesive experiences.

If you’ve ever felt your app or platform drifting into inconsistency, or noticed user drop-off tied to subtle frustrations, you might be experiencing these pain points firsthand. Recognizing them is the critical first step toward addressing the growing chaos in our AI-fueled digital landscape. Stay tuned as we unpack the most prevalent issues next.

The Core Problems in Modern User Experience Design

As generative AI accelerates the creation and deployment of digital content, it’s shining a harsh light on longstanding UX challenges. What once might have been manageable inconsistencies or oversights are now scaling rapidly, turning minor frictions into major barriers. Below, we explore three prevalent pain points that many teams are recognizing in this new era.

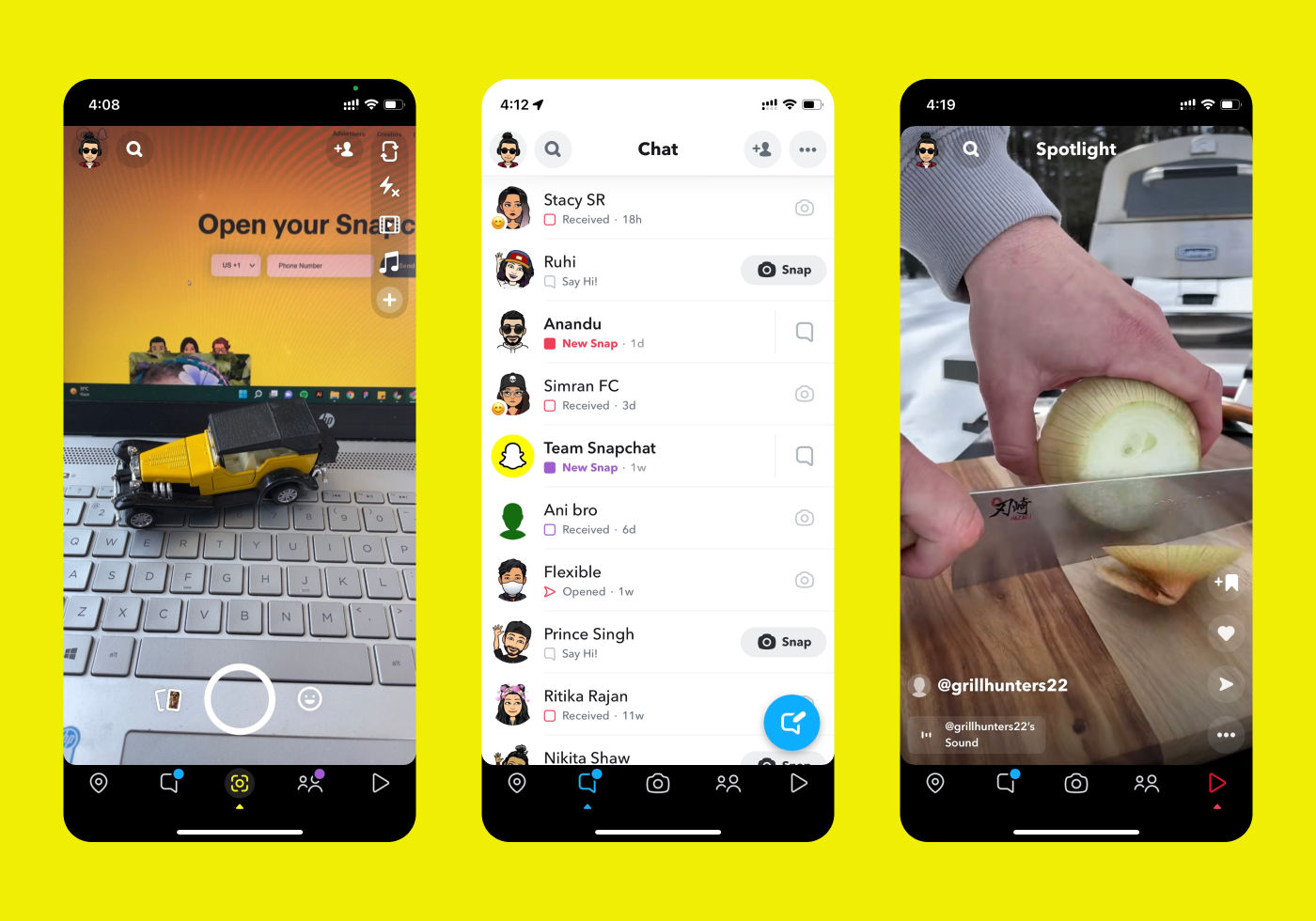

Inconsistency Across Digital Touchpoints

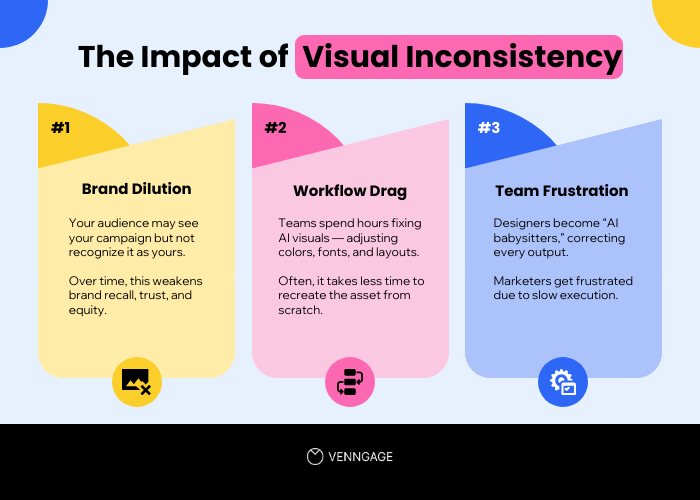

Users expect seamless journeys no matter where they interact with a brand—whether on a mobile app, desktop website, or emerging AI-powered interfaces like chatbots or voice assistants. Yet fragmented experiences remain rampant: mismatched branding elements (colors, fonts, logos shifting unexpectedly), divergent navigation patterns (menus in different locations or behaviors), or varying functionality (features available on one platform but buried or absent on another).

These discrepancies create confusion, forcing users to relearn interfaces and eroding confidence in the product. In an AI-driven landscape, this issue intensifies as generative tools produce content at scale—often introducing subtle variations in tone, layout, or visuals that don’t align across channels. Without strong governance, AI outputs can replicate or amplify inconsistencies, much like unmonitored generative content in marketing leading to off-brand messaging or diluted distinctiveness.
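For teams that want to make this kind of drift visible, one option is to compare the brand values each channel actually ships. The sketch below is a minimal illustration, not a description of any particular tool: the `TOKENS` dictionary, the token names, and the `find_token_drift` helper are all hypothetical, and it assumes each platform can export its brand values as simple key-value pairs.

```python
from typing import Dict, List, Tuple

# Hypothetical design tokens per channel; names and values are illustrative only.
TOKENS: Dict[str, Dict[str, str]] = {
    "web": {"color.primary": "#0A5FFF", "font.body": "Inter", "nav.position": "top"},
    "ios": {"color.primary": "#0A5FFF", "font.body": "Inter", "nav.position": "bottom"},
    "chatbot": {"color.primary": "#0B60FF", "font.body": "Inter"},
}


def find_token_drift(
    tokens: Dict[str, Dict[str, str]], reference: str
) -> List[Tuple[str, str, str, str]]:
    """Return (platform, token, expected, actual) for each mismatch with the reference."""
    expected = tokens[reference]
    drift = []
    for platform, values in sorted(tokens.items()):
        if platform == reference:
            continue
        for name, want in sorted(expected.items()):
            got = values.get(name, "<missing>")
            # Compare case-insensitively so "#0a5fff" and "#0A5FFF" count as equal.
            if got.lower() != want.lower():
                drift.append((platform, name, want, got))
    return drift


if __name__ == "__main__":
    for row in find_token_drift(TOKENS, reference="web"):
        print(row)
```

Not every difference is a defect. A bottom navigation bar on a phone may be a deliberate platform convention, so a report like this is best read as a list of differences to review rather than errors to fix.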

Symptoms to watch for:

- High bounce rates, especially on mobile-to-desktop transitions or cross-device sessions.

- Spikes in negative user feedback mentioning frustration or confusion.

- Reduced engagement metrics, such as lower time-on-site or fewer multi-page sessions.

Broader implications for enterprises: Brand dilution erodes customer loyalty over time, while lost trust translates to higher churn. Similar to the risks of non-compliant or inconsistent assets wasting marketing budgets in global teams, these UX gaps can undermine investments in AI personalization, turning potential advocates into detractors.


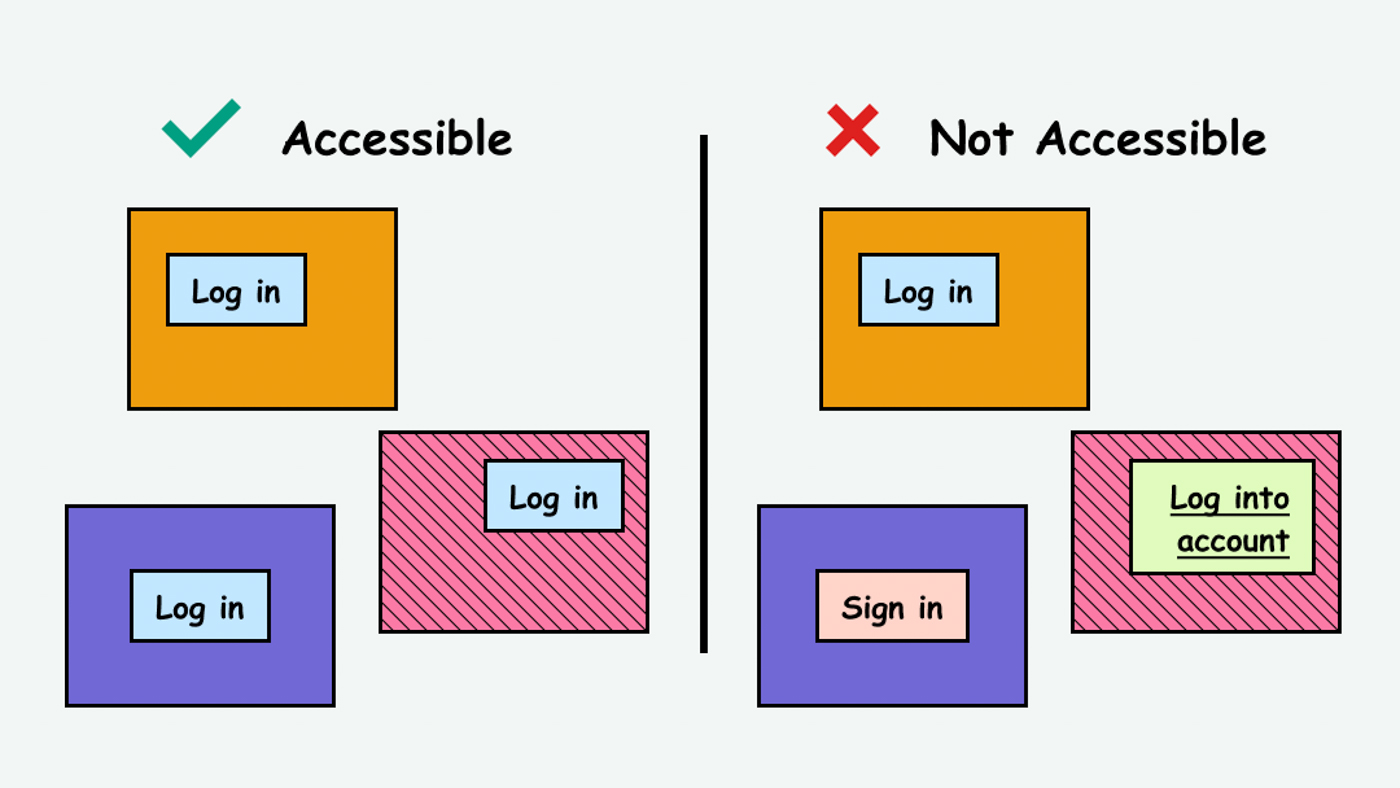

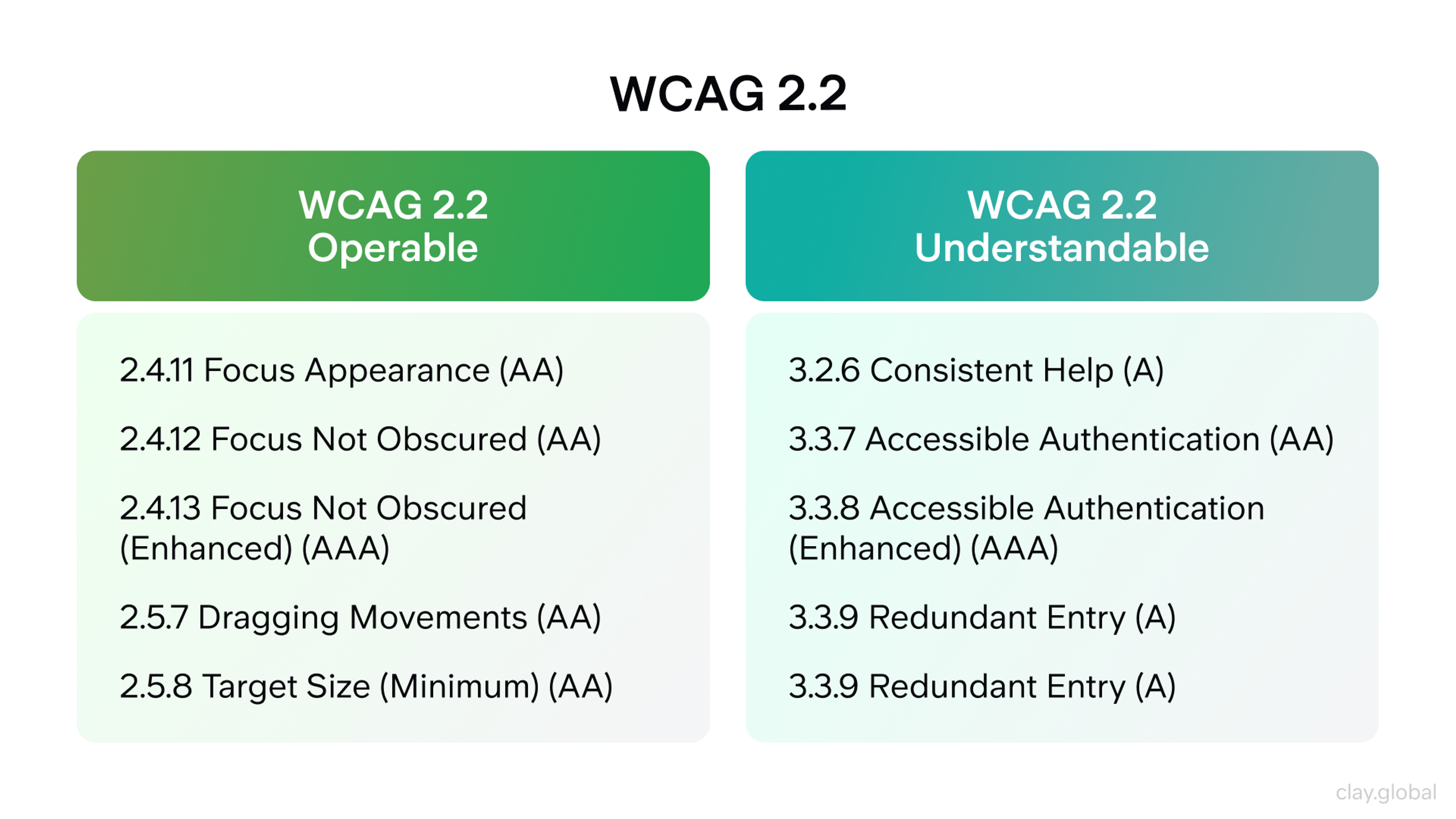

Accessibility and Inclusivity Gaps

Great UX serves everyone, yet many digital products still overlook diverse needs—such as those of users with visual, auditory, motor, or cognitive disabilities. Common oversights include poor color contrast, lack of alt text for images, non-keyboard-navigable elements, or captions missing from videos. AI-generated content exacerbates this by potentially introducing biases from training data, like inaccurate alt text or stereotypical depictions.

In a world saturated with AI tools churning out assets rapidly, ignoring accessibility doesn’t just exclude users—it amplifies inequities and risks perpetuating biases at scale.
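Of the oversights listed above, color contrast is one of the easiest to measure. The sketch below applies the contrast-ratio formula from WCAG 2.x, using the 4.5:1 threshold for normal text and 3:1 for large text at level AA. The function names are our own, and it assumes colors arrive as six-digit hex strings; it is a starting point, not a full accessibility audit.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to its linear value, per the WCAG 2.x definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a six-digit hex color such as '#1A2B3C'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio, ranging from 1 (identical colors) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check the level AA minimum: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


if __name__ == "__main__":
    print(round(contrast_ratio("#000000", "#FFFFFF"), 2))
    print(passes_aa("#767676", "#FFFFFF"), passes_aa("#777777", "#FFFFFF"))
```

Two grays that look nearly identical can fall on opposite sides of the threshold, which is why a check like this is more useful when run on generated assets automatically than when left to visual review.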

Symptoms to watch for:

- Accessibility-related legal complaints or audits flagging issues.

- Lower retention and engagement among underrepresented user groups.

- Missed opportunities in broader markets, as inclusive design expands reach.

Broader impact: Enterprises face reputational risks and regulatory scrutiny (e.g., evolving standards like the European Accessibility Act). Echoing concerns in hype-driven tech adoption, unchecked AI can deepen divides rather than bridge them, turning innovation into unintended exclusion.

Overwhelm from Information Overload

Cluttered interfaces—packed with excessive features, dense text, competing calls-to-action, or endless AI-generated elements—bombard users, making it hard to focus or decide. Generative AI worsens this by flooding experiences with dynamic content, personalized recommendations, or auto-created variations that prioritize quantity over clarity.

Users crave simplicity amid digital noise, yet many products overwhelm, leading to decision paralysis.

Symptoms to watch for:

- User fatigue signals, like quick exits or low scroll depth.

- High cart abandonment in e-commerce, or forms and in-app flows left incomplete.

- Feedback highlighting confusion or “too much going on.”

Tie to emerging trends: In tech-resistant communities, such as local retail adopting AI cautiously, success comes from human-centric restraint—enhancing experiences without overwhelming customers. Enterprises ignoring this risk alienating users who seek intuitive, uncluttered paths in an increasingly AI-filled world.

Recognizing these pain points early is key in the awareness stage. If they resonate with your projects, you’re not alone—many teams are grappling with AI’s double-edged impact on UX coherence and user satisfaction.

The Amplifying Role of AI in UX Challenges

Generative AI promises to revolutionize digital experiences, but its rapid integration is introducing new layers of complexity. As tools automate content creation and personalization, they’re also amplifying UX challenges in ways that traditional design processes weren’t built to handle. Recognizing these emerging issues is crucial in the awareness stage, especially as enterprises race to adopt AI without fully addressing the fallout.

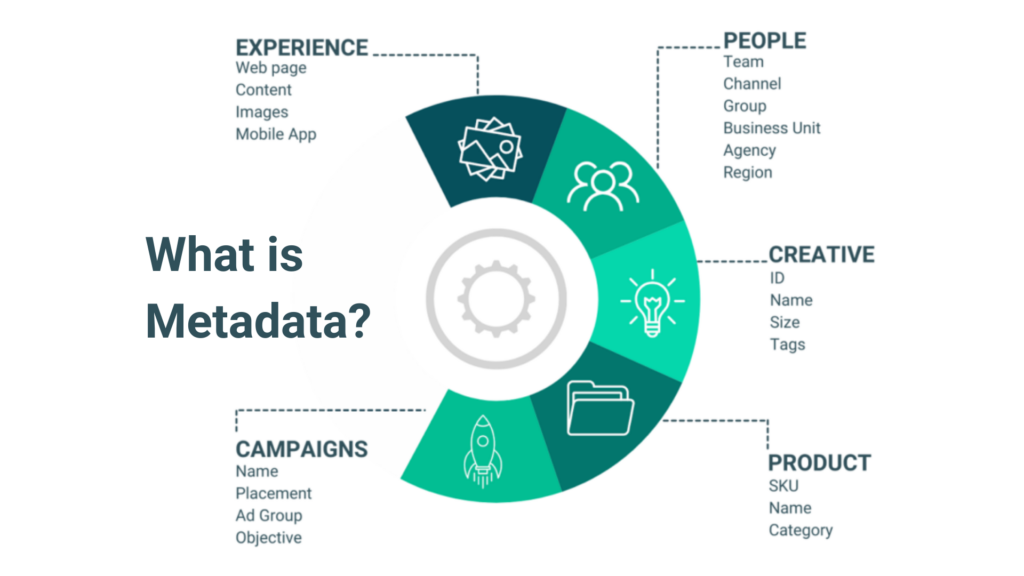

Generative AI and Content Proliferation

Generative AI tools are democratizing creation, enabling the rapid production of vast amounts of content—text, images, videos, and even interactive elements. While this boosts efficiency, it makes maintaining a cohesive user experience exponentially harder. Without robust governance, AI-generated assets can vary wildly in style, quality, and alignment, leading to fragmented interfaces where elements feel disjointed or off-brand.

In AI-enhanced apps, this proliferation often manifests as inconsistent tone (e.g., formal copy next to casual AI outputs), mismatched visuals (varying color palettes or resolutions), or unexpected functionality (dynamic elements that don’t integrate smoothly).

Symptoms to watch for:

- Growing user distrust from perceived “sloppiness” or unreliability in the interface.

- Feedback highlighting confusion over varying content quality or style shifts.

- Declining engagement as users encounter jarring or irrelevant AI-generated elements.

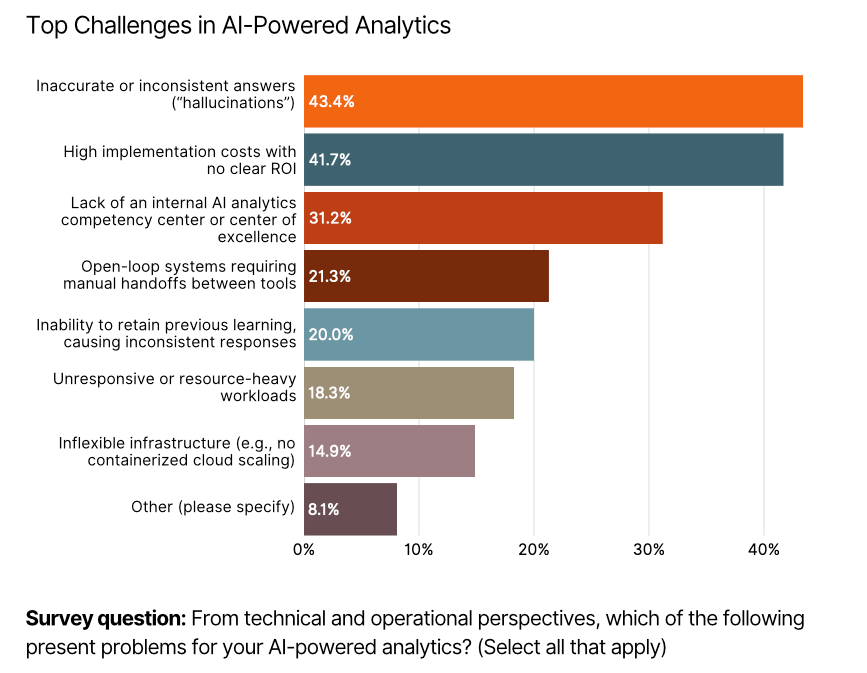

Broader implications: Investor warnings throughout 2025 have drawn parallels between unchecked AI hype and past bubbles, likening overinvestment in generative technologies to the subprime mortgage crisis—where short-term gains masked systemic risks. Similarly, unchecked AI content proliferation can erode user satisfaction and brand loyalty, leading to “crashes” in retention and trust when experiences feel impersonal or chaotic.


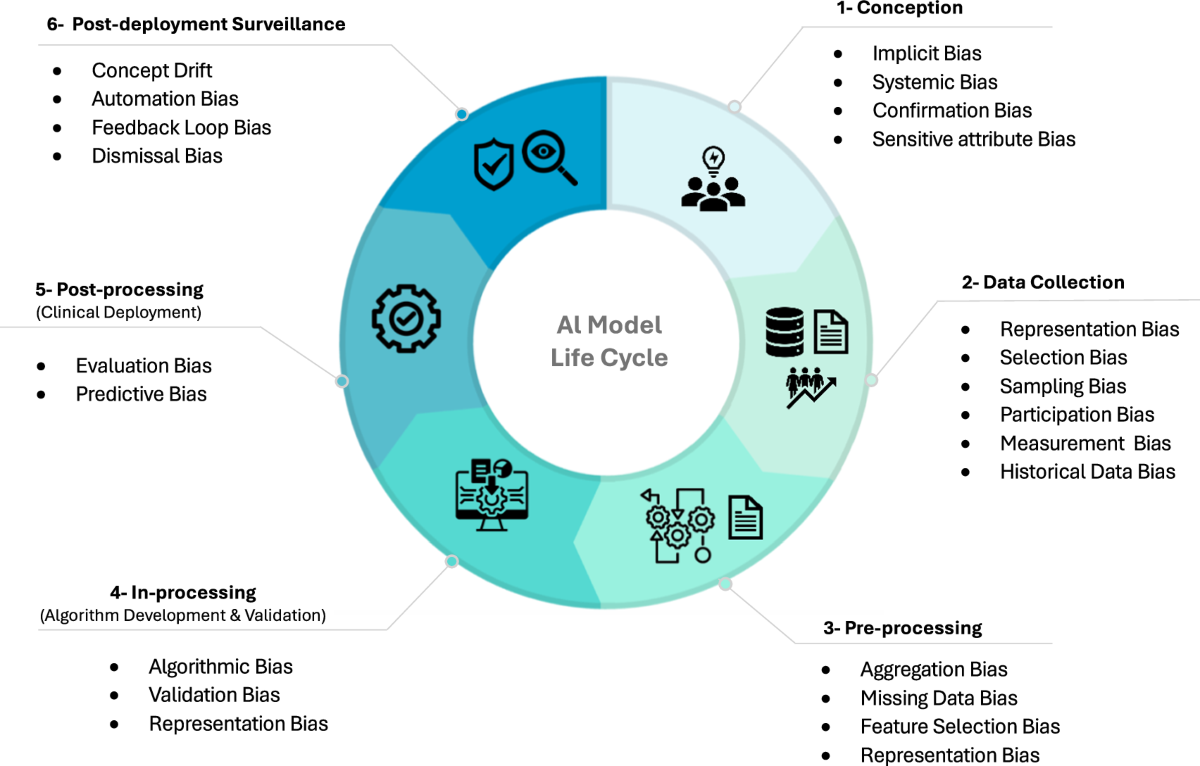

Bias and Ethical Concerns in AI-Driven Designs

AI algorithms, trained on vast but often imperfect datasets, can inadvertently perpetuate biases in UX elements—like skewed recommendation engines that favor certain demographics, stereotypical imagery in generated visuals, or interfaces that disadvantage underrepresented users.

This results in experiences that feel exclusionary, such as personalized feeds reinforcing echo chambers or designs assuming a “default” user profile (e.g., able-bodied, Western-centric).

Symptoms to watch for:

- Uneven experiences across user segments, with complaints from affected groups.

- Social media backlash or public callouts highlighting discriminatory outputs.

- Reputational hits from perceived insensitivity or unfairness.

Broader view: Amid growing scrutiny, initiatives in 2025—like MIT’s Responsible AI for Social Empowerment and Education (RAISE), UNESCO’s global efforts to promote ethical AI in education, and U.S. executive actions advancing AI literacy—signal increasing awareness of these pitfalls. These programs emphasize education and frameworks to mitigate bias, underscoring that ethical design isn’t optional in an AI-driven world.

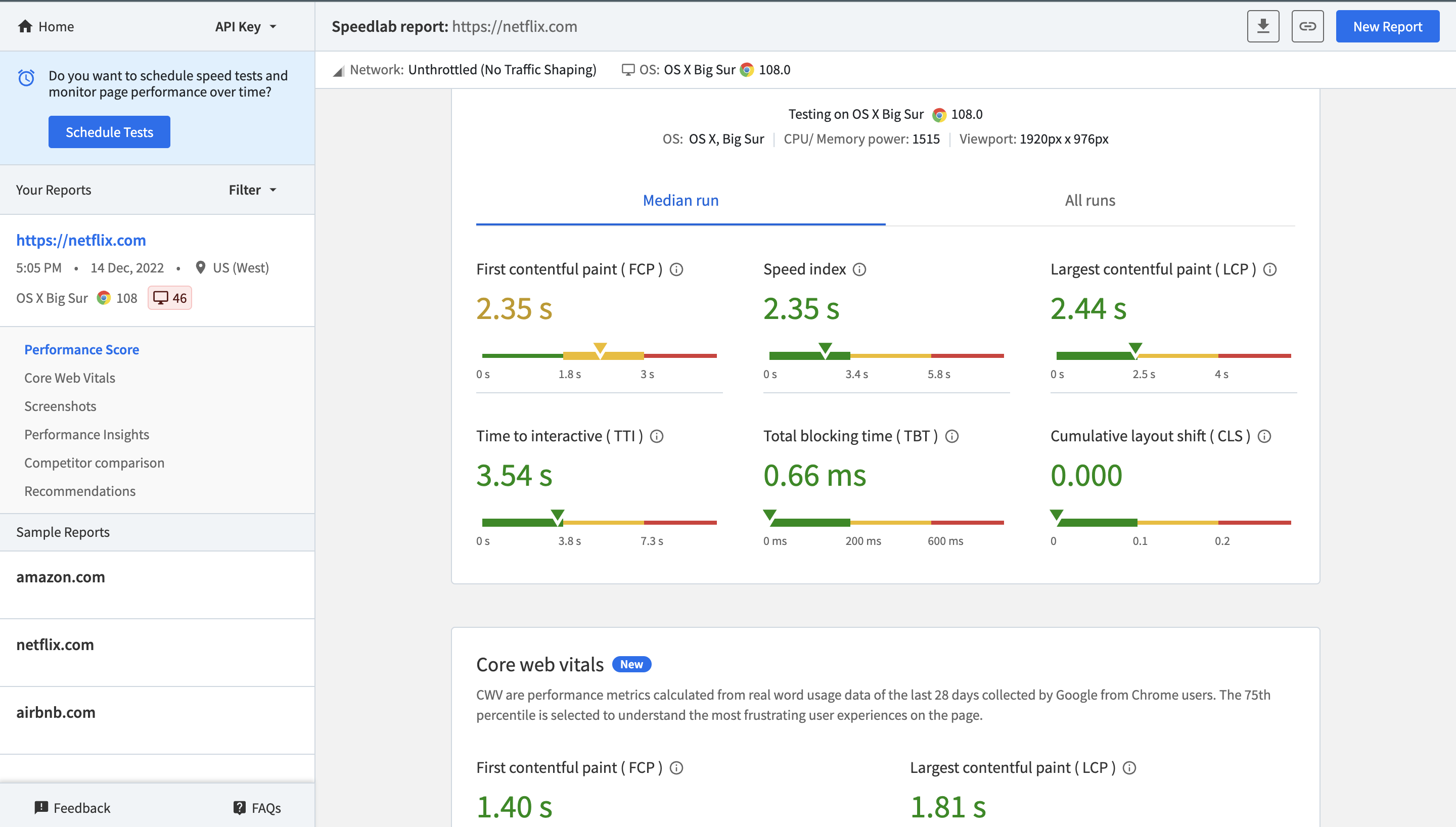

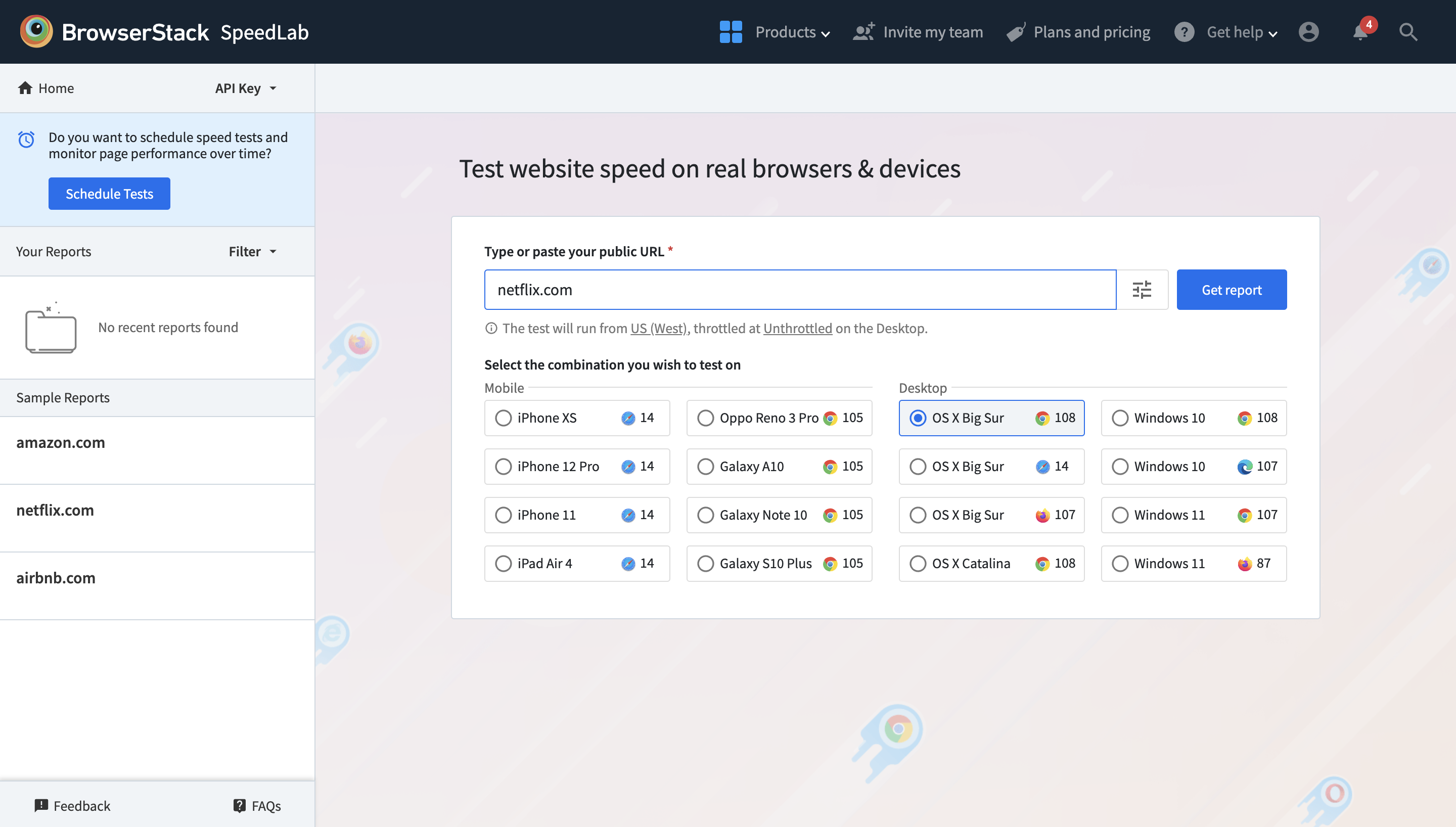

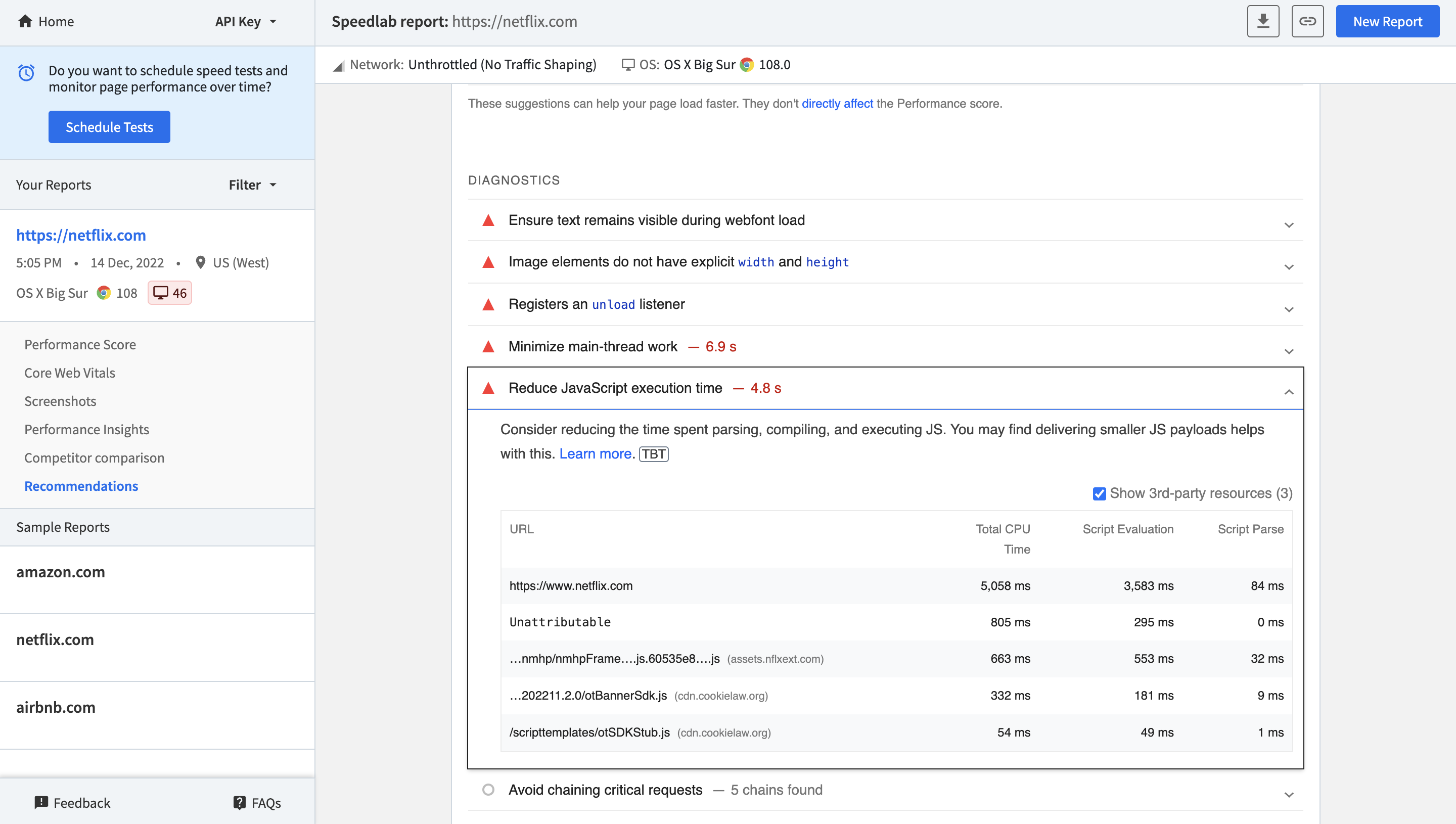

Performance and Optimization Hurdles

Real-time AI personalization—tailoring content, layouts, or recommendations on the fly—demands significant computational resources, often leading to delays in loading times, laggy interactions, or drained device batteries.

In resource-intensive apps, this can manifest as sluggish page transitions, delayed responses to user inputs, or irrelevant suggestions due to processing bottlenecks.

Symptoms to watch for:

- High abandonment rates during loading screens or personalized sections.

- User frustration expressed in reviews about “slow” or “unresponsive” experiences.

- Inefficient server/resource usage spiking costs without proportional benefits.
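To turn complaints about "slow" experiences into something measurable, teams often look at tail latency rather than the average, since a handful of very slow responses can hide behind a healthy-looking mean. The sketch below uses a nearest-rank percentile. The sample timings and the 300 ms budget are made-up values for illustration, and `percentile` and `over_budget` are hypothetical helper names.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def over_budget(samples_ms: Sequence[float], budget_ms: float, pct: float = 95.0) -> bool:
    """True when the chosen percentile of response times exceeds the latency budget."""
    return percentile(samples_ms, pct) > budget_ms


if __name__ == "__main__":
    # Illustrative response times in milliseconds for a personalized section.
    latencies_ms = [120, 130, 110, 140, 900, 125, 135, 115, 128, 132]
    print("p50:", percentile(latencies_ms, 50))
    print("p95:", percentile(latencies_ms, 95))
    print("over 300 ms budget:", over_budget(latencies_ms, budget_ms=300))
```

In this made-up sample the median looks fine while the 95th percentile is dominated by a single slow response, which is the pattern behind many "unresponsive" reviews.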

Connection to enterprise realities: Just as marketing teams conduct audits to optimize campaign performance and ROI, UX teams must contend with similar pressures in competitive landscapes. AI features that promise engagement can backfire if they introduce latency, pushing users toward simpler, faster alternatives.

These AI-specific pain points highlight how innovation can inadvertently create new friction. If your team is deploying generative features and noticing these signals, it’s a clear sign that awareness—and eventual action—is needed to harness AI’s potential without compromising the core UX promise of seamless, trustworthy interactions.

Industry-Wide Implications and Warning Signs

Beyond user frustration, unresolved UX challenges carry significant downstream consequences for organizations. In an AI-accelerated environment, where digital experiences scale rapidly, these issues compound—turning minor inefficiencies into substantial financial and strategic risks. Recognizing these broader impacts is essential in the awareness stage, as they often signal deeper systemic vulnerabilities.

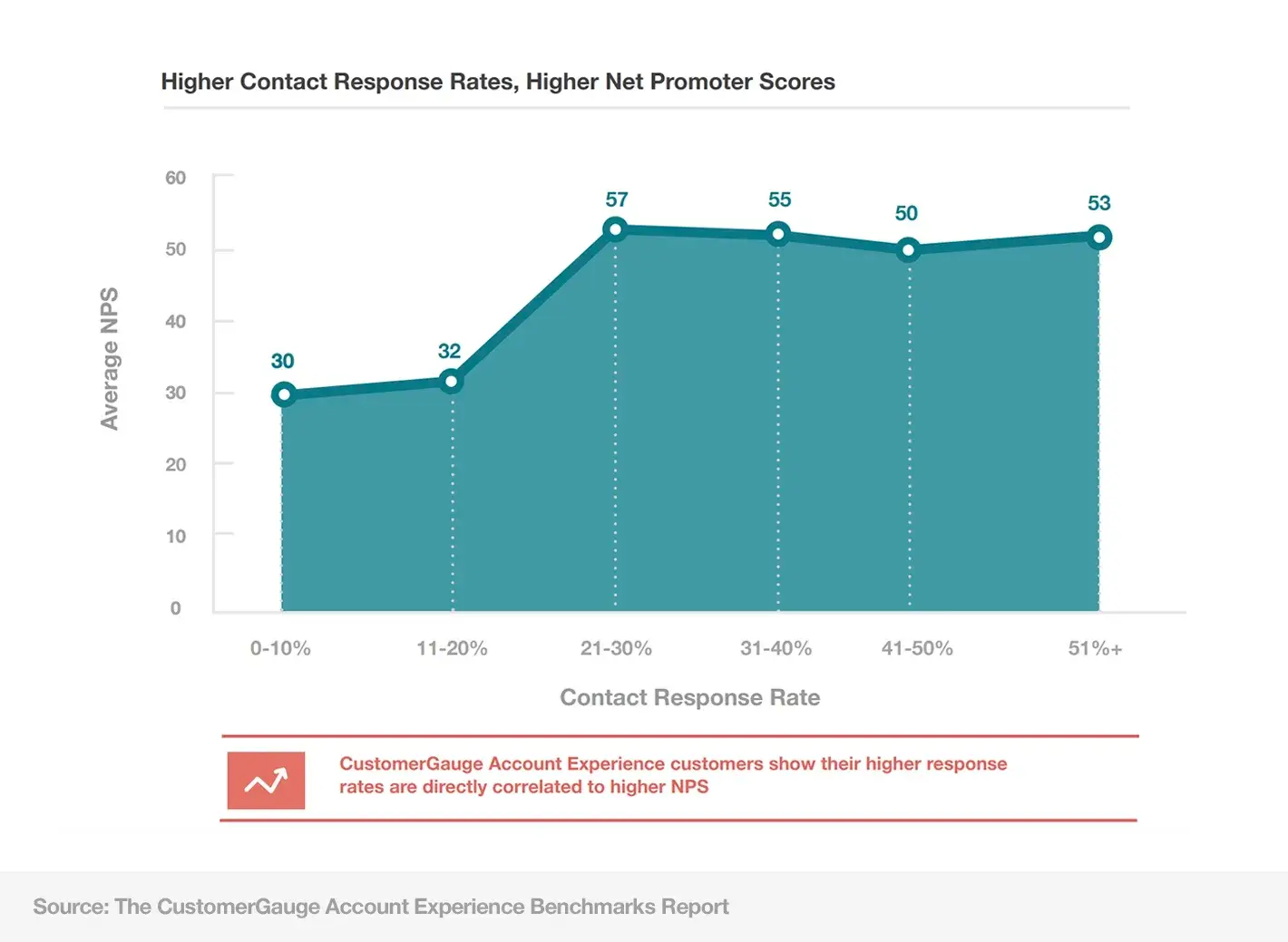

Economic and Operational Risks

Poor UX doesn’t just annoy users; it directly erodes the bottom line through wasted development resources, missed revenue opportunities from reduced conversions, and elevated churn rates. AI amplifies this at scale: generative tools enable faster feature rollout, but without cohesive design oversight, investments in personalization or dynamic content often fail to deliver proportional returns, leading to sunk costs in tech stacks and retraining.

Symptoms to watch for:

- Declining key metrics, such as Net Promoter Scores (NPS) signaling dissatisfaction or shrinking customer lifetime value from premature departures.

- Spiking operational inefficiencies, like higher support tickets or abandoned digital flows.
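Since Net Promoter Score comes up as a warning signal, it helps to recall how it is calculated: the percentage of promoters (ratings of 9 or 10) minus the percentage of detractors (ratings of 0 through 6) on a 0 to 10 scale. The sketch below follows that standard definition; the function name and the sample ratings are illustrative.

```python
from typing import Sequence


def net_promoter_score(ratings: Sequence[int]) -> float:
    """Percentage of promoters (9-10) minus percentage of detractors (0-6)."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


if __name__ == "__main__":
    # Illustrative survey responses: 5 promoters, 2 passives, 3 detractors.
    sample = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
    print(net_promoter_score(sample))
```

Tracking the score per platform or per release, rather than as a single company-wide number, makes it easier to tell whether a dip coincides with a specific AI-driven change.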

Inspiration from market signals: Significant funding flowed into AI compliance and governance platforms in 2025, such as WorkFusion’s $45 million raise for AI-driven financial crime compliance tools and broader investment in RegTech solutions reported in the billions of dollars. This underscores investor acknowledgment of these risks. In adjacent fields like marketing, where AI-generated assets can quickly become non-compliant or ineffective, similar waste occurs; the same logic applies to UX, where unchecked AI integration risks diluting returns on digital investments.

Competitive Benchmarking Failures

In an AI-accelerated market, rivals are leveraging generative tools to iterate interfaces faster, introduce hyper-personalized features, and refine experiences at unprecedented speeds. Falling behind means struggling to match these innovations, resulting in outdated products that feel clunky by comparison.

Symptoms to watch for:

- Eroding market share as users migrate to more intuitive alternatives.

- Negative user reviews or social comparisons highlighting competitors’ superior flows or engagement.

Tie-in to investor sentiment: Amid 2025’s growing cautions against AI hype, from reports of 95% of corporate GenAI pilots yielding limited value to warnings of overvaluation in tech sectors, investors are favoring diversified portfolios and grounded expectations. UX emerges as a true differentiator, one sustained not by flashy AI alone but by reliable, user-validated experiences that build a lasting advantage over fleeting, hype-driven gains.

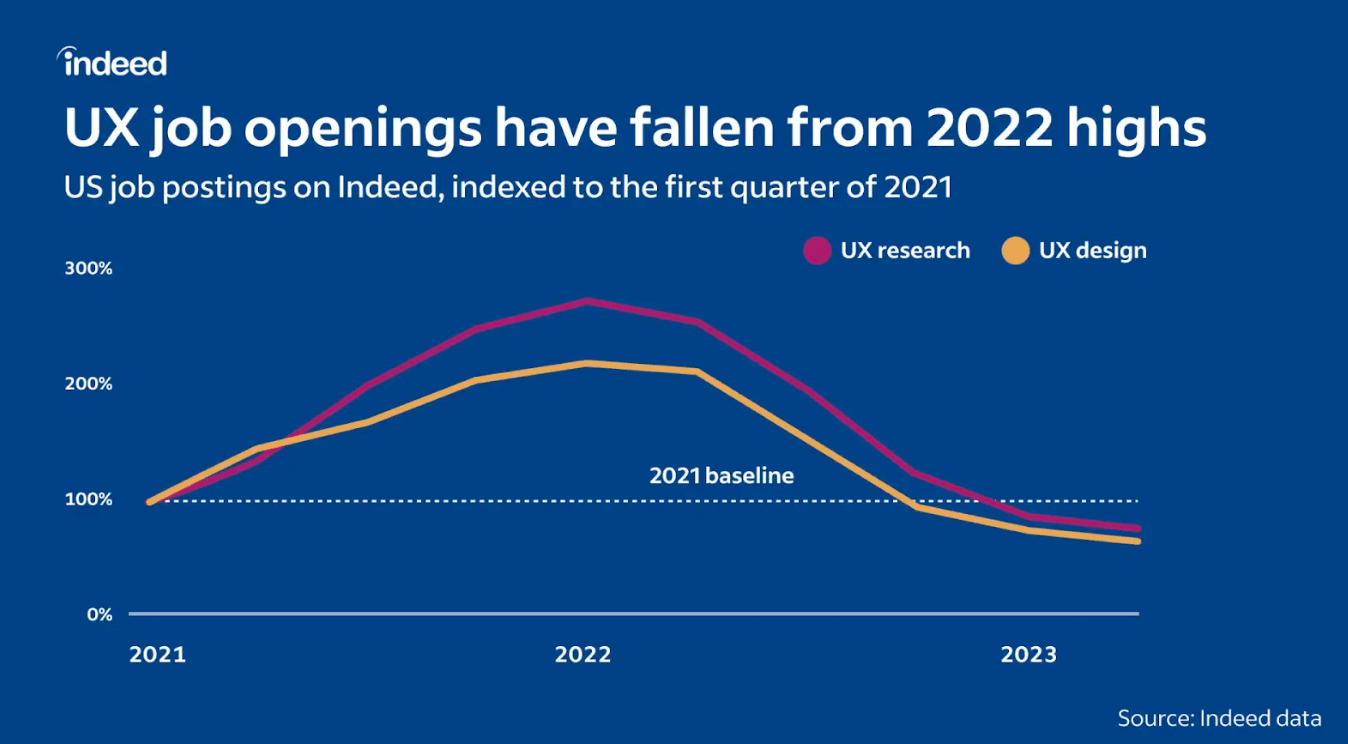

Talent and Ecosystem Gaps

AI’s rapid evolution has outpaced talent development, creating acute shortages of UX professionals skilled in human-AI interaction design, ethical implementation, and adaptive research methods. Teams often lean too heavily on generalists or outdated tools, yielding subpar AI-integrated designs.

Symptoms to watch for:

- Overworked designers facing burnout from constant firefighting.

- Stagnant innovation, with reliance on generic AI outputs rather than bespoke, user-centered solutions.

- Prolonged hiring cycles or skill mismatches in roles requiring AI literacy.

Broader ecosystem view: While AI hubs see boosts in foundational talent pipelines, gaps persist in specialized UX education and responsible design training—evident in reports of ongoing layoffs, market saturation concerns, and calls for upskilling. Recognizing these shortages early highlights the need for targeted development to bridge human-centric expertise with AI capabilities.

These organizational impacts underscore why UX pain points demand attention now. In the awareness stage, spotting ties to financial drain, competitive erosion, or talent strain can reveal if AI’s promises are translating to value—or merely amplifying vulnerabilities. Identifying these signals positions teams to pivot before costs escalate further.

Real-World Examples and Case Studies

Abstract case studies from various industries illustrate how UX challenges—often amplified by generative AI—can cascade into user dissatisfaction and operational hurdles. These anonymized or hypothetical examples, drawn from patterns observed in global brands and sectors throughout 2025, underscore the importance of early recognition in the awareness stage. Unaddressed issues frequently ripple outward, affecting engagement, trust, and broader perceptions.

Enterprise Marketing Parallels

Large enterprises often deploy generative AI to scale personalized marketing content across channels—emails, social posts, ads, and website elements. In one hypothetical scenario inspired by global consumer brands, a multinational company rolled out AI tools to generate localized campaign visuals and copy for holiday promotions. Initially efficient, the outputs introduced subtle inconsistencies: varying color palettes, off-brand tone shifts (e.g., robotic formality in casual markets), and mismatched messaging that spread via scraped data across touchpoints.

These translated directly to UX ripple effects in user interactions. Customers encountering personalized ads on social media might click through to a website featuring mismatched branding, leading to confusion during browsing or checkout. Similarly, email recipients saw engaging AI-crafted subject lines but landed on pages with disjointed layouts or irrelevant dynamic content.

Symptoms emerging over time:

- Increased drop-offs at key funnels, such as ad-to-site transitions.

- Social feedback noting “soulless” or inconsistent experiences, echoing backlash seen in real campaigns like AI-generated holiday ads criticized for lacking authenticity.

Unrecognized early, these issues contributed to broader problems: diluted brand perception and eroded trust, as users questioned the reliability of interactions in an era where AI inconsistencies proliferated unchecked across marketing ecosystems.

Consumer-Facing Industries

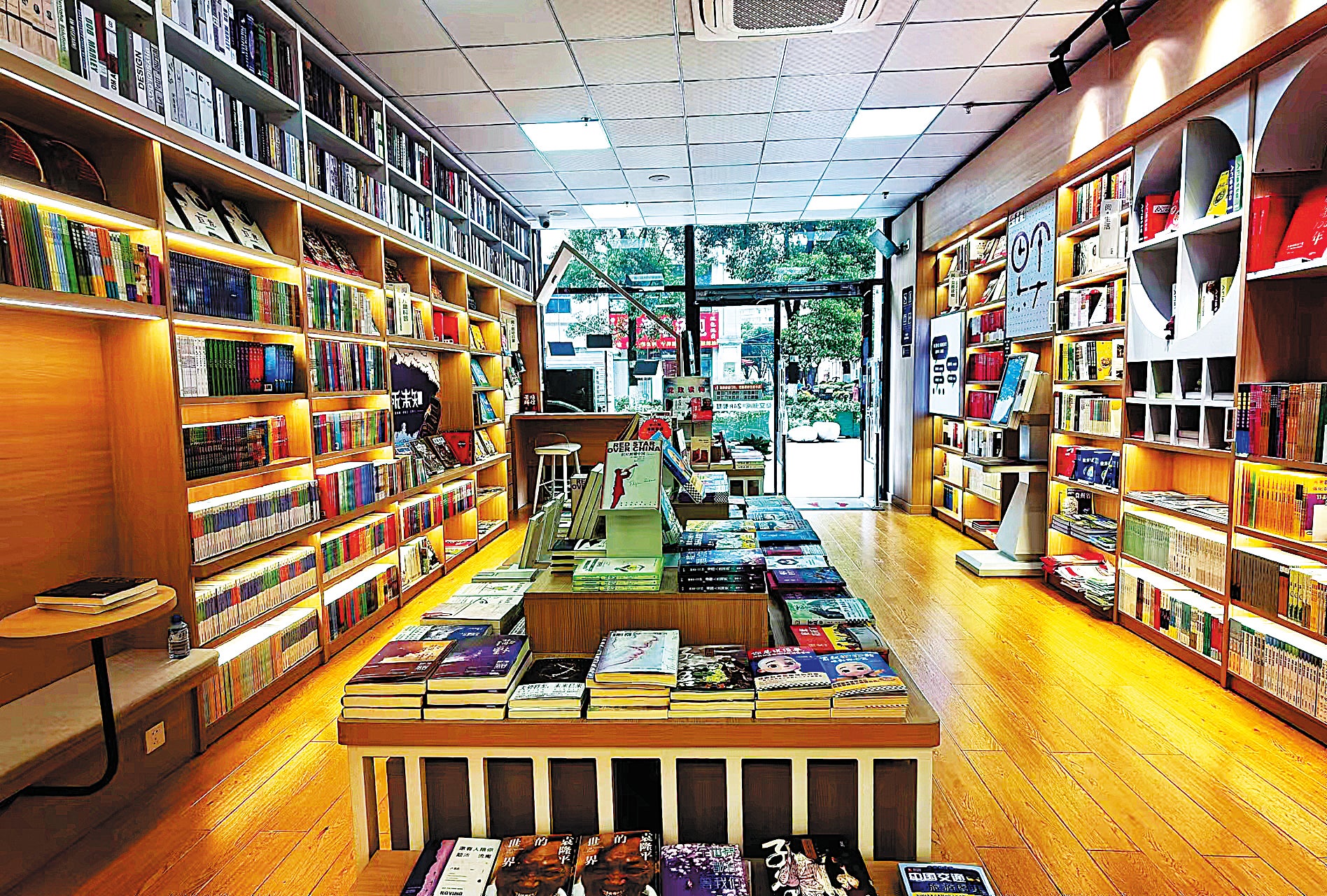

In retail and service sectors, where human connection drives loyalty, many organizations adopt AI selectively—for backend tasks like inventory optimization—while resisting full integration in customer-facing interfaces due to fears of impersonality.

Consider independent bookstores or chains in consumer-facing retail: They leverage AI for efficient stock management and recommendations in apps, predicting demand to avoid overstocking. However, the companion mobile apps often feature AI-driven interfaces—endless personalized feeds, automated chat support, or generative product descriptions—that feel sterile compared to in-store serendipity.

Users browsing the app encounter algorithmic suggestions prioritizing sales over discovery, cluttered screens with dynamic but irrelevant elements, or chatbots lacking nuance in queries about book recommendations. This contrasts sharply with physical stores, where staff interactions foster emotional ties and spontaneous finds.

Resulting UX tensions:

- Lower app engagement and retention, with users preferring in-person visits for the “human touch.”

- Feedback highlighting impersonal experiences, contributing to fragmented omnichannel journeys.

In 2025 trends, such cautious adoption reveals deeper tensions: AI enhances operations but risks alienating customers seeking empathy and relatability in sectors valuing personal connections.

Tech Startup Scenarios

Emerging companies in AI ecosystems frequently prioritize rapid feature development—packing products with generative capabilities like real-time personalization, chat agents, or automated workflows—to attract investment and users. UX often takes a backseat amid the rush to demonstrate innovation.

A hypothetical example mirrors patterns in 2025 AI startups: A new platform launches with advanced AI tools for content creation or task automation, boasting impressive demos. Post-launch, however, users encounter cluttered interfaces overwhelmed by options, inconsistent AI outputs requiring constant corrections, or laggy performance from unoptimized integrations.

Early adopters provide feedback loops revealing design flaws: confusing navigation buried under features, unreliable interactions eroding trust, or overwhelming choices leading to paralysis.

Common symptoms:

- High initial sign-ups followed by rapid churn.

- Reviews describing “flashy but frustrating” experiences, with post-launch iterations struggling to catch up.

These scenarios show how overlooking UX in favor of AI features can lead to early stumbles, amplifying vulnerabilities in competitive landscapes where user satisfaction determines survival.

Spotting parallels in your own context—whether enterprise-scale inconsistencies, sector-specific impersonality, or startup feature overload—is a vital awareness signal. These cases remind us that AI’s rapid advancement can expose and intensify UX gaps if not monitored closely.

Conclusion

The rapid proliferation of generative AI in 2025 has magnified longstanding and emerging UX pain points, making them more visible and urgent than ever. Core challenges include inconsistencies across touchpoints causing confusion and drop-offs, accessibility gaps excluding diverse users, and information overload from cluttered, AI-flooded interfaces leading to fatigue.

AI-specific issues further heighten the stakes: content explosion creating chaos and distrust, biases perpetuating ethical concerns, and performance hurdles from real-time demands causing frustration. Organizationally, these manifest as economic risks with declining metrics, competitive benchmarking failures, and talent gaps overwhelming teams. As AI trends accelerate content creation and personalization, these vulnerabilities are no longer subtle—they demand immediate recognition.

A Call to Reflection

Take a moment to audit your own products, apps, or team processes. Are you seeing high bounce rates, accessibility complaints, overload feedback, inconsistent AI outputs, bias signals, slowdowns, metric dips, market lag, or skill shortages? Spotting these symptoms in your context is the foundational step in the buyer’s journey—acknowledging the problem before exploring paths forward.

Looking Ahead

Simply recognizing these amplified pain points clears the fog, opening doors to deeper consideration of priorities and informed decision-making in UX evolution. The awareness stage is where transformation begins.

Final Thought

In a digital future increasingly shaped by AI, balanced adoption stories—from cautious retailers preserving human connections to enterprises investing in governance—offer optimism. With mindful awareness as the compass, teams can navigate challenges to build experiences that are not just innovative, but truly human-centered and sustainable.

Thank you for joining this exploration. Awareness today paves the way for thriving tomorrow.
